If you are running VMware ESXi hypervisor version 6.5 U1 (build number 5969303) on a standalone server or on the Nutanix HCI platform, you need to upgrade to the next recommended compatible and supported version for your infrastructure, for example VMware ESXi 6.7 U1 or U2. ESXi 6.5 U1 has a known bug that causes high CPU usage on the host even without any workload.

The VMware ESXi 6.5 U1 high CPU usage bug is fixed in later releases, for example 6.5 U3 or later, so it is recommended to upgrade from ESXi 6.5 U1 to the latest or a near-latest version if possible.
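To spot hosts on the affected build, a minimal sketch such as the following can help. It assumes the version string has the form `VMware ESXi 6.5.0 build-5969303`, which is what `vmware -v` typically prints on the ESXi shell; the function names `parse_build` and `is_affected_build` are hypothetical and only for illustration.

```python
import re

AFFECTED_BUILD = 5969303  # ESXi 6.5 U1 build number discussed in this article


def parse_build(version_line):
    """Return the build number from a version string, or None if absent.

    Assumes a string of the form 'VMware ESXi 6.5.0 build-5969303'.
    """
    match = re.search(r"build-(\d+)", version_line)
    return int(match.group(1)) if match else None


def is_affected_build(version_line):
    """True when the string reports ESXi 6.5 U1 build 5969303."""
    return parse_build(version_line) == AFFECTED_BUILD


if __name__ == "__main__":
    print(is_affected_build("VMware ESXi 6.5.0 build-5969303"))
```

The version string can be pasted in by hand or collected from each host with whatever tooling is already in place.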

VMware ESXi 6.5 U1 Known Issues

VMware ESXi 6.5 U1 build number 5969303 has the following known issues:

Installation Issues

- The installation of an unsigned VIB with the --no-sig-check option on a secure boot enabled ESXi host might fail

- Installing unsigned VIBs on a secure booted ESXi host is prohibited because unsigned VIBs prevent the system from booting. The VIB signature check is mandatory on a secure booted ESXi host.

- esxcli software vib update -n esx-base -n vsan -n vsanhealth -n esx-tboot -d /vmfs/volumes/datastore1/update-from-esxi6.5-6.5_update01.zip

- Remediation against an ESXi 6.5 Update 1 baseline might fail on an ESXi host with secure boot enabled

- If you use vSphere Update Manager to upgrade an ESXi host with an upgrade baseline containing an ESXi 6.5 Update 1 image, the upgrade might fail if the host has secure boot enabled.

Workaround: You can use a vSphere Update Manager patch baseline instead of a host upgrade baseline.

Miscellaneous Issues

- Upgrades to the BIOS, BMC, and PLSA firmware versions are not displayed on the Direct Console User Interface (DCUI)

- The DCUI provides information about the hardware versions, but when they change, the DCUI remains blank.

Networking Issues

- The Intel i40en driver does not allow you to disable the hardware capability to strip the RX VLAN tag

- For the Intel i40en driver, the hardware always strips the RX VLAN tag, and you cannot disable this behavior with a vsish command.

- Adding a Physical Uplink to a Virtual Switch in the VMware Host Client Might Fail

- Adding a physical network adapter, or uplink, to a virtual switch in the VMware Host Client might fail if you select Networking > Virtual switches > Add uplink.

Storage Issues

- VMFS datastore is not available after rebooting an ESXi host for ATS-only array devices

- If the target connected to the ESXi host supports only implicit ALUA and has only standby paths, the device gets registered, but the device attributes related to media access are not populated. If an active path is added after registration, it can take up to 5 minutes for the VAAI attributes to refresh. As a result, VMFS volumes configured as ATS-only might fail to mount until the VAAI update.

- LED management commands might fail when you turn LEDs on or off in HBA mode

- When you run the following LED management commands

esxcli storage core device set -l locator -d

esxcli storage core device set -l error -d

esxcli storage core device set -l off -d

they might fail in the HBA mode of some HP Smart Array controllers, for example, P440ar and HP H240. In addition, the controller might stop responding, causing the following management commands to fail:

LED management:

esxcli storage core device set -l locator -d

esxcli storage core device set -l error -d

esxcli storage core device set -l off -d

Get disk location:

esxcli storage core device physical get -d

This problem is firmware specific and it is triggered only by LED management commands in the HBA mode. There is no such issue in the RAID mode.

- The vSAN disk serviceability plugin lsu-lsi-lsi-mr3-plugin for the lsi_mr3 driver might fail with a Can not get device info … or not well-formed … error

- The vSAN disk serviceability plugin provides extended management and information support for disks. In vSphere 6.5 Update 1, the following commands:

Get disk location:

esxcli storage core device physical get -d for JBOD mode disk.

esxcli storage core device raid list -d for RAID mode disk.

LED management:

esxcli storage core device set --led-state=locator --led-duration= --device=

esxcli storage core device set --led-state=error --led-duration= --device=

esxcli storage core device set --led-state=off --device=

might fail with one of the following errors:

Plugin lsu-lsi-mr3-plugin cannot get information for device with name .

The error is: Can not get device info … or not well-formed (invalid token).
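The long-form LED commands listed above share one shape, which the following sketch makes explicit by assembling the argument list. It only builds the command and does not run it. The device identifier and duration are left blank in the commands above, so the values used here (`naa.example`, `60`) are placeholders, and the helper name `led_command` is hypothetical.

```python
VALID_LED_STATES = ("locator", "error", "off")


def led_command(device, state, duration=None):
    """Build the argument list for an LED management command.

    Uses only the flags shown in this article: --led-state,
    --led-duration and --device. The caller supplies the device
    identifier and, optionally, the duration.
    """
    if state not in VALID_LED_STATES:
        raise ValueError(f"unsupported LED state: {state!r}")
    args = ["esxcli", "storage", "core", "device", "set",
            f"--led-state={state}"]
    if duration is not None:
        args.append(f"--led-duration={duration}")
    args.append(f"--device={device}")
    return args


if __name__ == "__main__":
    # 'naa.example' is a placeholder, not a real device identifier.
    print(" ".join(led_command("naa.example", "locator", 60)))
```

Building the list first makes it easy to review the exact command before it is run on a controller that is known to misbehave in HBA mode.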

Virtual Machine Management Issues

- VMware Host Client runs actions on all virtual machines on an ESXi host instead of on a range selected with Search

- When you use Search to select a range of virtual machines in VMware Host Client, if you check the box to select all and run an action such as power off, power on, or delete, the action might affect all VMs on the host instead of only the selection.

VMware ESXi 6.5 U1 Issues Fixed

The issues in VMware ESXi 6.5 U1 build number 5969303 are fixed in VMware ESXi 6.5 U3 or later versions.

ESXi 6.5 U3 and later releases also include the following new features:

- The ixgben driver adds queue pairing to optimize CPU efficiency.

- With ESXi 6.5 Update 3 you can track license usage and refresh switch topology.

- ESXi 6.5 Update 3 provides legacy support for AMD Zen 2 servers.

- Multiple driver updates: ESXi 6.5 Update 3 provides updates to the lsi-msgpt2, lsi-msgpt35, lsi-mr3, lpfc/brcmfcoe, qlnativefc, smartpqi, nvme, nenic, ixgben, i40en and bnxtnet drivers.

- ESXi 6.5 Update 3 provides support for Windows Server Failover Clustering and Windows Server 2019.

- ESXi 6.5 Update 3 adds the com.vmware.etherswitch.ipfixbehavior property to distributed virtual switches to enable you to choose how to track your inbound and outbound traffic, and utilization. With a value of 1, the com.vmware.etherswitch.ipfixbehavior property enables sampling in both ingress and egress directions. With a value of 0, you enable sampling in egress direction only, which is also the default setting.
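The two values of the com.vmware.etherswitch.ipfixbehavior property described above can be summarized in a small lookup. This is only an illustration of the documented behavior, not a way to set the property; the function name `sampling_directions` is hypothetical.

```python
IPFIX_PROPERTY = "com.vmware.etherswitch.ipfixbehavior"
DEFAULT_VALUE = 0  # egress-only sampling is the default setting


def sampling_directions(value=DEFAULT_VALUE):
    """Return the traffic directions sampled for a given property value."""
    if value == 1:
        return ("ingress", "egress")
    if value == 0:
        return ("egress",)
    raise ValueError(f"{IPFIX_PROPERTY} accepts 0 or 1, got {value!r}")


if __name__ == "__main__":
    for value in (0, 1):
        print(value, sampling_directions(value))
```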

Upgrade ESXi on Nutanix HCI Platform

If you are running VMware ESXi hypervisor version 6.5 U1 build number 5969303 on a Nutanix Acropolis cluster, you have to upgrade VMware ESXi 6.5 U1 to 6.7 U.x, depending on your hardware vendor's compatibility with the Nutanix platform. Please refer to the Nutanix AOS and VMware ESXi version compatibility matrix on the Nutanix Portal.

Prerequisites before upgrading ESXi on the Nutanix HCI platform

- HA must be enabled

- DRS must be fully automated

- Admission Control must be disabled

- All ESXi hosts must have vCenter connectivity

- All ESXi hosts must be managed by vCenter
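The prerequisites above can be expressed as a simple checklist. The following is a minimal sketch that assumes the settings have already been gathered (by hand or from vCenter) into a record; the `ClusterSettings` class and its field names are hypothetical and do not correspond to any Nutanix or VMware API.

```python
from dataclasses import dataclass


@dataclass
class ClusterSettings:
    """Hypothetical snapshot of the vSphere cluster settings to verify."""
    ha_enabled: bool
    drs_fully_automated: bool
    admission_control_enabled: bool
    all_hosts_connected_to_vcenter: bool
    all_hosts_managed_by_vcenter: bool


def unmet_prerequisites(settings):
    """Return the prerequisites that are not satisfied, in list order."""
    checks = [
        (settings.ha_enabled, "HA must be enabled"),
        (settings.drs_fully_automated, "DRS must be fully automated"),
        (not settings.admission_control_enabled,
         "Admission Control must be disabled"),
        (settings.all_hosts_connected_to_vcenter,
         "All ESXi hosts must have vCenter connectivity"),
        (settings.all_hosts_managed_by_vcenter,
         "All ESXi hosts must be managed by vCenter"),
    ]
    return [message for passed, message in checks if not passed]


if __name__ == "__main__":
    current = ClusterSettings(True, False, True, True, True)
    for problem in unmet_prerequisites(current):
        print("Not ready:", problem)
```

An empty result means all five prerequisites are met and the upgrade can be started.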

Upgrade ESXi on Nutanix HCI Cluster

If you have Nutanix-certified hardware such as the Nutanix NX series, Lenovo HX, or Dell XC and are running VMware ESXi version 6.5 U1 build number 5969303, you can upgrade AOS to version 5.10.2 or later and then upgrade ESXi to a compatible version, from 6.5 U3 up to 6.7 U.x.

Note: Check the AOS and VMware ESXi compatibility matrix on the Nutanix Portal.
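As a rough first filter for the target range mentioned above (6.5 U3 up to 6.7 U.x), release labels can be compared as tuples. This sketch assumes labels written like `6.5 U3` or `6.7 U2`; the helper names are hypothetical, and a label passing this check still has to be confirmed against the compatibility matrix for the specific AOS version and hardware.

```python
import re


def parse_release(label):
    """Turn a label such as '6.5 U3' into a comparable tuple (6, 5, 3)."""
    match = re.fullmatch(r"\s*(\d+)\.(\d+)(?:\s*U(\d+))?\s*", label)
    if not match:
        raise ValueError(f"unrecognized release label: {label!r}")
    major, minor, update = match.groups()
    return (int(major), int(minor), int(update or 0))


def in_target_range(label):
    """True for releases from 6.5 U3 up to any 6.7 update."""
    release = parse_release(label)
    return (6, 5, 3) <= release and release[:2] <= (6, 7)


if __name__ == "__main__":
    for label in ("6.5 U1", "6.5 U3", "6.7 U2"):
        print(label, in_target_range(label))
```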

The Nutanix AOS and ESXi hypervisor upgrade procedure is as follows:

Step 1: Check the Nutanix ESXi cluster health status from Nutanix Prism

Menu > Health > Action > Run NCC Checks

When the Nutanix cluster Health status and Resiliency Status are both OK, proceed to step 2.

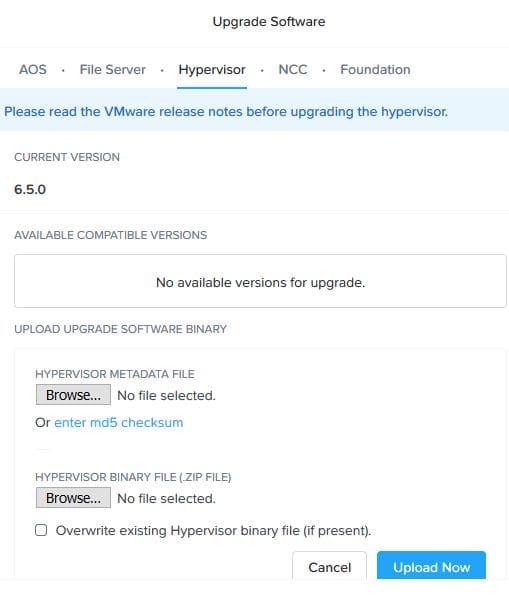

Step 2: Go to Settings icon > Software Upgrade > Hypervisor

Upload the JSON file and the VMware ESXi hypervisor offline bundle (.zip) file

Step 3: Click Pre-Check, then upgrade the ESXi hypervisor

Step 4: Check the Nutanix cluster status again.
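The gate between step 1 and step 2 above is the part most worth writing down explicitly: the upgrade should not start unless both statuses are healthy. A minimal sketch, assuming the two statuses are read from Prism and typed in as strings; the function name and the accepted spellings are assumptions for illustration only.

```python
HEALTHY_VALUES = {"OK", "OKAY"}  # spellings accepted by this sketch


def ready_for_step_2(health_status, resiliency_status):
    """True only when both the health and resiliency statuses are OK."""
    return (health_status.strip().upper() in HEALTHY_VALUES
            and resiliency_status.strip().upper() in HEALTHY_VALUES)


if __name__ == "__main__":
    if ready_for_step_2("OK", "OK"):
        print("Proceed to Software Upgrade > Hypervisor")
    else:
        print("Resolve the reported issues and run the NCC checks again")
```

The same check applies again at step 4, after the hypervisor upgrade has finished.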

Conclusion

The Nutanix HCI platform is hypervisor agnostic: it is compatible with VMware ESXi 5.x through the latest version, Hyper-V, Citrix Xen, and the Nutanix Acropolis Hypervisor (AHV). All of these hypervisors can be upgraded through one-click upgrade on a Nutanix Acropolis cluster without any downtime.

I’m Manish Kumar, founder of HyperHCI.com and a senior IT consultant with 13+ years of experience in infrastructure design and cybersecurity. I am an official certified SME for ISC2 and Nutanix, and I am also certified in CISSP, CompTIA Security+, VMware, and AWS. My expertise covers HCI, virtualization, cloud computing, networking, and security across Nutanix, VMware, and AWS platforms.